User Instructions Before

User Instructions After

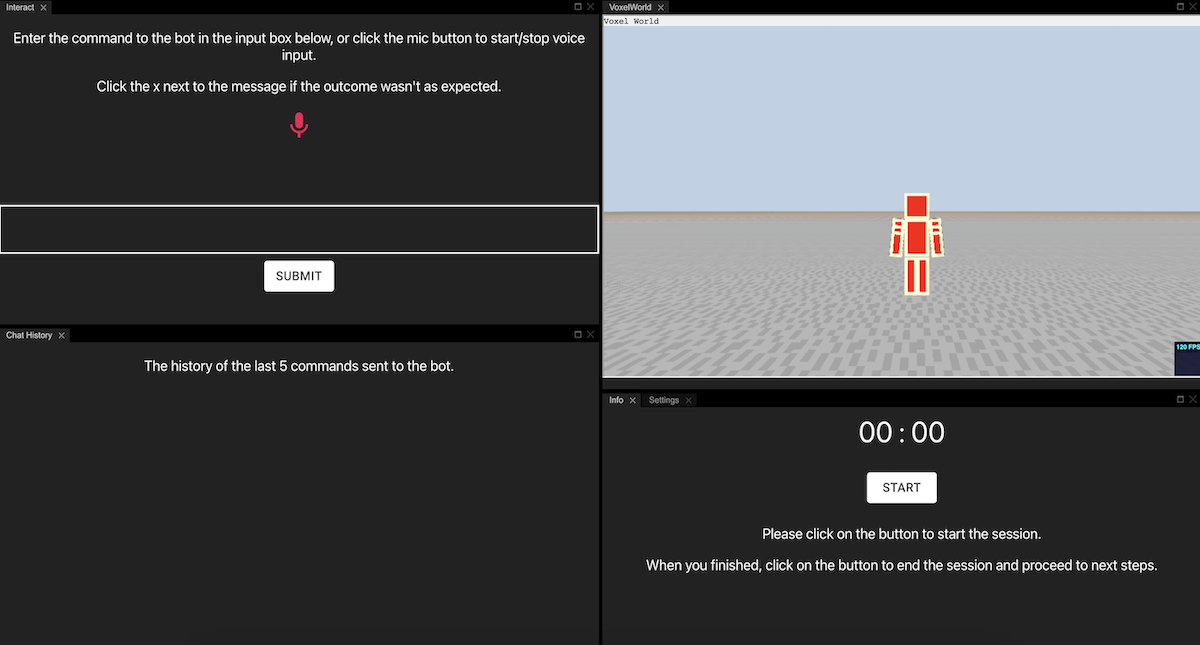

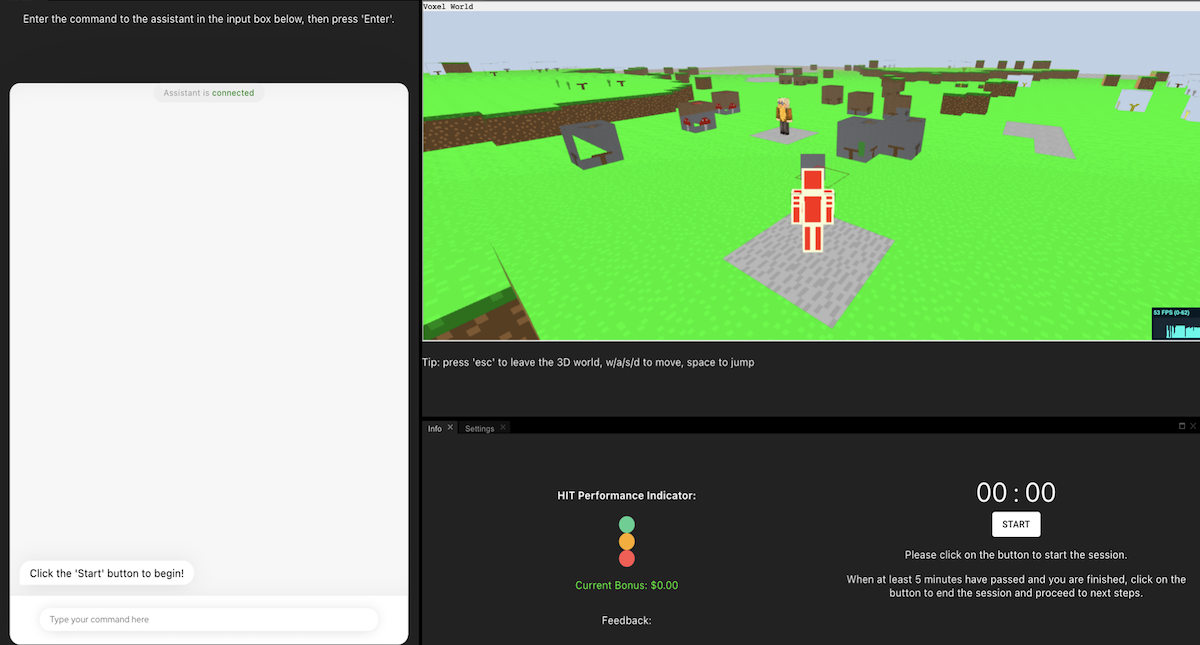

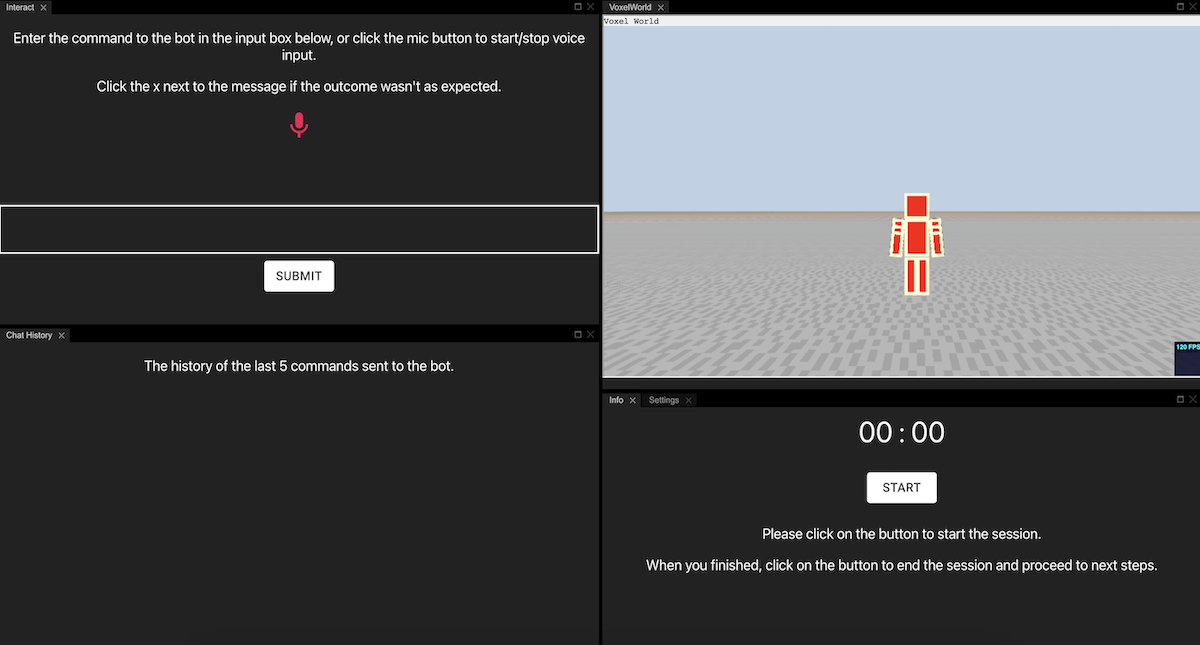

Interaction Dashboard Before

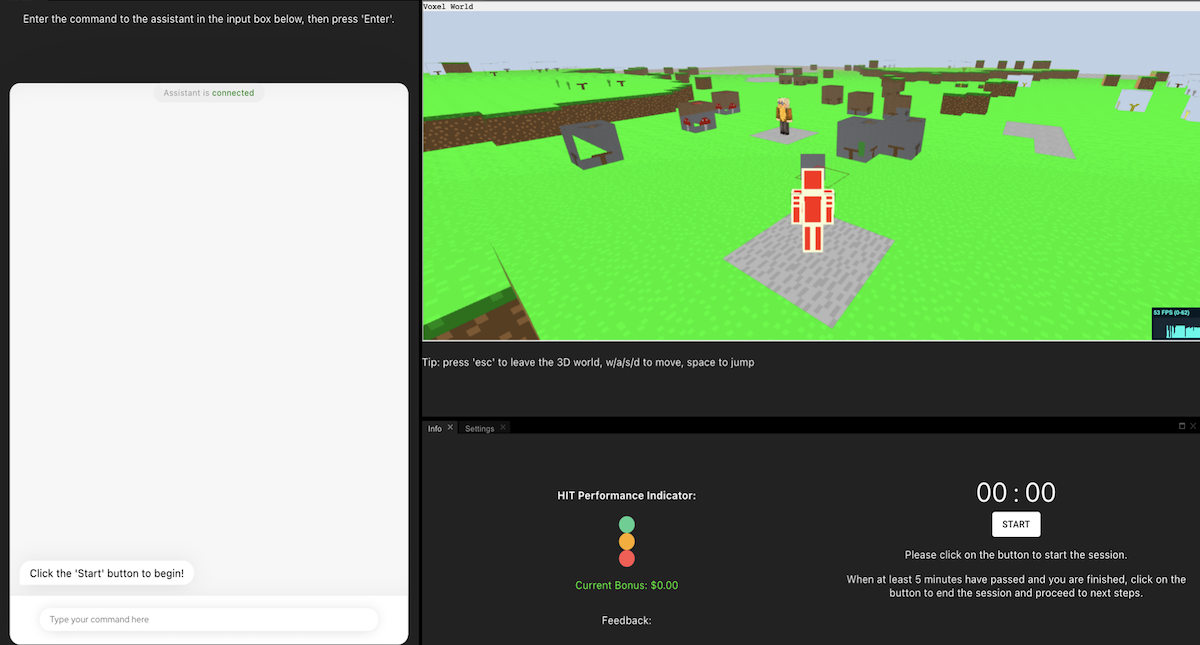

Interaction Dashboard After

Droidlet is an open source AI research project in the FAIR Labs research group at Meta. The Droidlet research objective is to study heterogeneous learning in modular assistive agents. I joined the team as an AI Resident in August 2021 to improve the interfaces between crowd workers and the agent. During my time there, I lowered data collection costs by 74%, created a new 3D voxel-based annotation interface used by hundreds of crowd workers, and launched a new agent interaction feature: multi-turn dialog.

The primary workstream I was involved with on the Droidlet team was improving the agent's human-in-the-loop learning pipeline. At a high level, this was a pseudo-automated data collection and retraining pipeline - crowd workers would interact with the agent via Amazon Mechanical Turk, then mark when errors occurred and attribute them to the correct agent submodule (language, vision, etc.). A different set of crowd workers would then annotate this interaction data, growing the agent's training data set.
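As a rough illustration of the error attribution step described above, the sketch below groups interactions by the submodule a crowd worker blamed, so that each group can be handed to the matching annotation task. It is a minimal sketch, not the Droidlet implementation: the `Interaction` class, the `route_errors` function, and the example commands are all hypothetical names invented for this example.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Interaction:
    """One crowd worker command (hypothetical structure for illustration)."""
    command: str
    # Submodule the worker blamed for the error; None means no error was marked.
    error_module: Optional[str] = None


def route_errors(interactions: List[Interaction]) -> Dict[str, List[Interaction]]:
    """Group error-marked interactions by the submodule they were attributed to."""
    queues: Dict[str, List[Interaction]] = defaultdict(list)
    for item in interactions:
        if item.error_module is not None:
            queues[item.error_module].append(item)
    return dict(queues)


interactions = [
    Interaction("build a red cube", error_module="language"),
    Interaction("go to the house", error_module="vision"),
    Interaction("come here"),  # succeeded, so nothing to annotate
    Interaction("destroy that", error_module="language"),
]
queues = route_errors(interactions)
for module, items in sorted(queues.items()):
    print(module, [i.command for i in items])
```

Interactions with no marked error drop out at this step, which is why the number of correctly marked errors per interaction matters so much for the cost of the pipeline.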

Working with humans in the loop involves challenges that go beyond model architectures and learning algorithms. Apart from making tools that are effective for cooperative people, it was necessary to plan for annotators who would sometimes behave erratically, or even adversarially. Cheating workers could avoid doing the task at all, try to game the task qualification criteria, or simply do the bare minimum to pass without engaging with the task. Even workers who are not acting adversarially can present challenges to development. They may not understand the instructions if these are not presented clearly, their knowledge of the English language may vary, and they may lack the technical aptitude to navigate the interface. These constraints necessitate a focus on usability and user testing throughout the life cycle of the project.

The improvement rate of the agent's constituent models was a function of the quantity and diversity of system errors, and was constrained, to first order, by the resources available to fund interactions with the agent. Therefore, the goal of my work on the Droidlet team was to efficiently generate and correctly mark as many high-quality errors as possible in each interaction, and a focus on interface usability was critical to this end.

I joined the Droidlet team as part of a one-year AI research Residency. The initial phase of the Residency involved joining an existing workstream as a contributing engineer; then, in the final third of the Residency, I proposed, scoped, and completed my own research workstream. Together, these two phases were made up of three (relatively) distinct projects:

1. UI/UX Experiments - I was tasked with improving the usability of the React user interfaces used by crowd workers to interact with the Droidlet agent, with the goal of improving data collection efficiency.

2. Voxel Annotation Interface - At the time that I joined the team, only one of the agent's modules had been neuralized: the natural language model, a neural semantic parser. My second project at Droidlet was to build the annotation interface for the second neural module, vision. The heuristic vision code was replaced with a 3D ConvNet that consumed voxel data, and I owned the interface to annotate vision model inferences.

3. Multi-Turn Dialog - Prior to my work, the Droidlet agent did not have strong conversational abilities. The user would issue a command, the agent would try to interpret and carry out that command, and would then either succeed or fail. The research project that I proposed was to allow the agent to ask for clarification using natural language. This would allow the agent to preemptively overcome unknown synonyms, failures of the semantic parser, or failures of memory.
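To make the clarification idea in the third project concrete, here is a minimal sketch of a clarification loop, assuming the agent has already produced a ranked list of candidate referents for a phrase it could not resolve. This is not the Droidlet dialog code: `clarify_reference`, the question wording, and the `ask` callback are hypothetical and exist only for illustration.

```python
from typing import Callable, List, Optional


def clarify_reference(
    phrase: str,
    candidates: List[str],
    ask: Callable[[str], str],
) -> Optional[str]:
    """Ask a yes/no question per candidate until the user confirms one.

    `ask` is any function that shows a question to the user and returns
    their reply as text (hypothetical interface for this sketch).
    """
    for candidate in candidates:
        reply = ask(f'By "{phrase}", do you mean the {candidate}?')
        if reply.strip().lower() in {"yes", "y"}:
            return candidate
    # No candidate was confirmed; the caller decides how to fail gracefully.
    return None


# Scripted replies stand in for a real user in this example.
scripted_replies = iter(["no", "yes"])
resolved = clarify_reference(
    "the thingy",
    ["red cube", "blue sphere"],
    ask=lambda question: next(scripted_replies),
)
print(resolved)
```

Passing the `ask` function in as a parameter keeps the loop independent of any particular chat interface, which also makes it easy to test with scripted replies as shown.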

In any resource-limited environment that involves humans, improving the usability of the system can be the key to unlocking results at scale.

The Droidlet project has its own research objectives, but it is also an open source project meant to be a tool for Embodied AI researchers at large. Below you can find papers on the language interface (NSP), the agent design, and the human-in-the-loop training pipeline. On the last of these, I am the second author.

Neural Semantic Parser | Droidlet Agent | HiTL Paper (most recent) | GitHub Repo