The Turing Test was proposed by Alan Turing in 1950 (he called it the “imitation game”) as a benchmark for machine intelligence. In simple terms, a machine passes the Turing Test when human judges chatting with it through text can’t reliably tell it apart from a human.

In 2014, a chatbot named Eugene Goostman was widely reported to have passed the Turing Test for the first time, though it did so by pretending to be a thirteen-year-old from Ukraine in order to mask its poor language skills. What’s notable, however, is that only six years later the Turing Test is essentially obsolete. Anyone with access to the right API can make a bot that is very, very hard to tell apart from a human: in OpenAI’s own tests, people identified news articles written by GPT-3 correctly only about 52% of the time, barely better than a coin flip. The Guardian published an entire article written by GPT-3 last month, and I’ll say more about that model in a moment. You can play with GPT-2 (GPT-3’s predecessor) through a web interface called Talk to Transformer to get a sense of how convincing its output is. The GPT-3 API is not yet publicly available.

The crazy thing about the GPT models is that they’re not intelligent, at least not in the way most people think about intelligence, and they don’t “know” anything in the usual sense of knowing. When you type in “10+10=” and it spits back 20, it’s not doing any math. And when you ask it to write code for a button that looks like a watermelon, it doesn’t know anything about code, buttons, or watermelons.

Let me take a tangent to unpack that a bit, because in some ways it’s an incredible coup within AI.

If you were to build an AI from scratch with the goal of pretending to be human, you might start with an underlying structure capable of memory, inference, logic, etc., and then teach it a whole bunch of stuff about the world. Maybe it would be useful to give it vision and hearing sensors so it could get a better sense of what objects and concepts “are” and how they relate to each other. Maybe you would try to give the machine a theory of mind so it could understand social interaction. Maybe it would even need structures that resemble biological brains.

It turns out that the bots currently most successful at pretending to be human do none of that. GPT-3 does one thing: given the previous words, predict the next word (technically the next token, which may be a word or a word fragment). It was pre-trained on a huge amount of text from the internet. It takes the prompt you give it and uses it to predict, over and over again, what is most likely to come next. So when you type in “10+10=”, it has seen enough similar examples to statistically determine that the next characters should probably be a ‘2’ followed by a ‘0’. GPT-3 can add three-digit numbers with better than 80% accuracy, which is truly incredible because the model probably never saw most of those specific combinations of numbers during training.
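To make “predict the next word, over and over” concrete, here is a drastically simplified sketch in Python. It is not how GPT-3 works internally: instead of a 175-billion-parameter transformer, it just counts which word followed which in a tiny made-up corpus (a bigram model) and greedily appends the most frequent follower. The functions `train_bigrams` and `generate` are hypothetical names for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words followed it in the training text."""
    words = text.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], prompt: str, max_new_words: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # this word was never followed by anything in training; stop
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# A tiny, made-up training corpus of space-separated "words".
corpus = "10 + 10 = 20 . 5 + 5 = 10 . 10 + 10 = 20 ."
model = train_bigrams(corpus)
print(generate(model, "10 + 10 =", max_new_words=1))  # 10 + 10 = 20
print(generate(model, "5 + 5 =", max_new_words=1))    # 5 + 5 = 20
```

Because this toy model looks only at the single previous word, it also answers “5 + 5 =” with 20: it has no notion of arithmetic, only of what tends to follow “=”. GPT-3 conditions on the whole prompt and learned far richer statistics, which is one reason it does so much better.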

This method of learning is very cool and also very problematic. If you train an AI on a lot of text that humans wrote, it inherits human racial and gender biases. This is a hot topic and an active area of research, because no one has a reliable fix yet.

Anyway, GPT-3 is wild, and you can go down a real rabbit hole with this stuff. This is a great post if you want to go into more depth.

For the rest of this article, I’m going to let go of the Turing Test and computers explicitly pretending to be people. I’m interested in how we build products that have a great user experience, and for that purpose a slightly lower bar is justified.

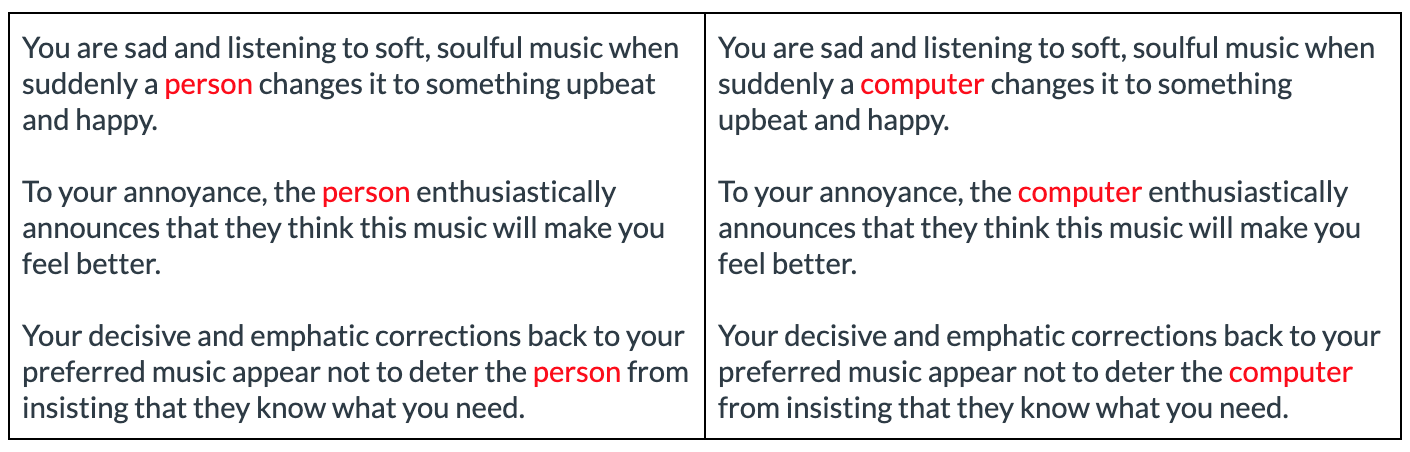

The Media Equation, from Byron Reeves and Clifford Nass’s book of the same name, is a framework for thinking about how we anthropomorphize computers: when we expect them to behave like people, and when we expect them to behave like machines.

If you think about it, you can almost certainly recall a recent instance when you expected your computer, phone, or other device to behave like a person. Perhaps you got annoyed because it couldn’t read the inflection in your voice, tell that you were busy, or figure out that you’re not in the mood for a romcom right after a breakup. It’s hard for us not to treat even rudimentary agents as if they should be emotionally intelligent.

For a device or interface to be emotionally intelligent, its first task is knowing what emotion to respond to. Reading human emotion accurately, however, is extremely difficult. Even other humans aren’t that great at it, despite having high-fidelity sensory information and the capacity for empathy. When people are asked to identify the emotion someone in a video is experiencing, they get it right only about 70% of the time for the obvious emotions, and do even worse for the subtle ones.

Still, there are a surprising number of tools already in the experience designer’s tool belt:

—If you have access to a video camera, you can estimate heart rate, heart rate variability, and other physiological signals that correlate with emotional state, in addition to reading the more traditional facial movements.

—You can predict some changes in mood using only location data.

—You can use contextual evidence from interface actions, e.g. how quickly the user moves the mouse or how curt their vocal instructions are.
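As a hedged illustration of the interface-action idea, the sketch below turns raw pointer samples into a single behavioral signal, average mouse speed, and compares it against the user’s own baseline. It does not detect emotion; at most it flags a change that might correlate with one. The `PointerSample` type, both functions, and the 1.5× threshold are hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class PointerSample:
    t: float  # timestamp in seconds
    x: float  # horizontal position in pixels
    y: float  # vertical position in pixels


def mean_speed(samples: list[PointerSample]) -> float:
    """Average pointer speed in pixels per second over a sequence of samples."""
    if len(samples) < 2:
        return 0.0
    # Total path length: sum of straight-line distances between consecutive samples.
    distance = sum(
        math.hypot(b.x - a.x, b.y - a.y) for a, b in zip(samples, samples[1:])
    )
    elapsed = samples[-1].t - samples[0].t
    return distance / elapsed if elapsed > 0 else 0.0


def deviates_from_baseline(current: float, baseline: float, threshold: float = 1.5) -> bool:
    """Flag when current speed is at least `threshold` times the user's baseline.

    The 1.5 default is a hypothetical value, not an empirically validated one.
    """
    return baseline > 0 and current / baseline >= threshold


samples = [PointerSample(0.0, 0, 0), PointerSample(0.5, 3, 4), PointerSample(1.0, 6, 8)]
speed = mean_speed(samples)
print(speed)  # 10.0
print(deviates_from_baseline(speed, baseline=6.0))  # True
```

Comparing against each user’s own baseline, rather than a global norm, reflects the point above that these signals are only weakly tied to emotion and vary a lot from person to person.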

GPT-3 suggests, though, that the ground-up method may not always be the most efficient, given the tools at our disposal and the privacy and ethical concerns around monitoring our users. A generative model could converge on the same outcome (an emotionally intelligent assistant, device, or interface) without the designer needing to build in an explicit, deterministic model of emotion.

To be clear, this is speculative. No one has built such a system yet, to the best of my knowledge. But there is reason to believe it is possible.

First, GPT-3 already shows signs of parroting emotional intelligence. Much of the text it was trained on was written by humans operating in social environments, and it can even write halfway-decent poetry. It’s not crazy to think that a model similar to GPT-3 could produce emotionally intelligent responses with high reliability if it were specifically designed to do so.

There is also a version of this out in the wild that most of us are intimately familiar with: the ad recommendation engines at Google and Facebook. A common suspicion is that the big tech companies are listening to us through our phones all the time, and that when they hear us mention a product, they advertise it to us online. This is plausible, but it isn’t what’s actually happening.

What’s happening is that they have a big predictive model of your behavior, trained on all of the past decisions you’ve made online: the things you’ve clicked on, the products you’ve purchased, the groups you’ve joined. Everything that’s legal and possible for them to capture goes into a virtual simulacrum of “you.” All that needs to happen then is for Facebook to ask the virtual version of you what it would like to buy, and then advertise those products to the real you. Every once in a while, the real you and the virtual you talk about the same thing at the same time, and it’s pretty creepy.
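The “virtual you” idea can be sketched, under heavy simplifying assumptions, as a profile built from past actions that is then asked to rank candidate ads. Real ad systems use large learned models over many signals; here a plain frequency count of product categories stands in for that model, and every function name and data point is made up for illustration.

```python
from collections import Counter


def build_profile(history: list[str]) -> Counter:
    """Count how often each product category appears in a user's past actions."""
    return Counter(history)


def rank_ads(profile: Counter, candidates: dict[str, str]) -> list[str]:
    """Order candidate ads (ad name -> category) by the user's affinity for that category.

    Categories the user never engaged with score 0, because a Counter
    returns 0 for missing keys.
    """
    return sorted(candidates, key=lambda ad: profile[candidates[ad]], reverse=True)


# Hypothetical history of categories the user clicked on or bought from.
history = ["hiking", "hiking", "coffee", "hiking", "books", "coffee"]
ads = {"trail shoes": "hiking", "espresso maker": "coffee", "sofa": "furniture"}
print(rank_ads(build_profile(history), ads))  # ['trail shoes', 'espresso maker', 'sofa']
```

Nothing in this sketch listens to anything: the ranking falls out of past behavior alone, which is the point of the paragraph above.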

The ethics of this are up for debate, but we could leverage the same convergent predictive modeling to improve our relationships with technology. Imagine a model that has been pre-trained on user interactions. You don’t need to label the data; you can just feed in the history so that the model learns to predict that interaction Y is likely to come after interaction X. This pre-training might need to be specific to each interface, but given the flexibility of GPT-3, I suspect there could be at least some transferability.
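Here is a minimal sketch of learning “Y tends to come after X” from unlabeled interaction history, assuming the history is simply a list of sessions, each a sequence of action names. It uses a first-order Markov chain (transition counts turned into probabilities), a far simpler technique than a pre-trained GPT-style model; the action names and functions are hypothetical.

```python
from collections import Counter, defaultdict


def fit_transitions(sessions: list[list[str]]) -> dict[str, Counter]:
    """Learn from raw, unlabeled interaction logs which action tends to follow which."""
    transitions: dict[str, Counter] = defaultdict(Counter)
    for session in sessions:
        for x, y in zip(session, session[1:]):
            transitions[x][y] += 1
    return transitions


def predict_next(transitions: dict[str, Counter], last_action: str) -> dict[str, float]:
    """Return the estimated P(next action | last action); empty if never observed."""
    counts = transitions.get(last_action, Counter())
    total = sum(counts.values())
    return {action: n / total for action, n in counts.items()} if total else {}


# Hypothetical interaction logs from a media app.
logs = [
    ["open_app", "search", "play", "skip", "skip", "close_app"],
    ["open_app", "search", "play", "pause"],
    ["open_app", "browse", "play", "skip", "close_app"],
]
model = fit_transitions(logs)
print(predict_next(model, "skip"))  # close_app about 0.67, skip about 0.33
```

No labels are needed: the ordering of the log itself is the training signal. Even this crude model picks up that, in these made-up logs, skipping tends to precede closing the app.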

Your phone already has an immense amount of context about your behavior that it could use as a prompt for such a model. An application could run natively and privately in the phone’s operating system and share your emotional needs with only the devices you choose, in order to improve the user experience of those products. Emotionally intelligent devices could bring us:

—A step change in the accuracy of recommendation engines, e.g. those at Netflix and Pandora

—Devices that are capable of coaching us, e.g. helping with interview prep or encouraging us to lose weight

—Games that are responsive to emotional state, e.g. a thriller that wants you to be scared, but not so scared that you quit

Our users already treat our devices and applications as if they should be emotionally intelligent. Closing that gap is one of the great opportunities in human-computer interaction.